Precomputing Avatar Behavior From Human Motion Data

Creating controllable, responsive avatars is an important problem in computer games and virtual environments. Recently, large collections of motion capture data have been exploited for increased realism in avatar animation and control. Large motion sets have the advantage of accommodating a broad variety of natural human motion. However, when a motion set is large, the time required to identify an appropriate sequence of motions is the bottleneck for achieving interactive avatar control. In this paper, we present a novel method of precomputing avatar behavior from unlabelled motion data in order to animate and control avatars at minimal runtime cost. Based on dynamic programming, our method finds a control policy that indicates how the avatar should act in any given situation. We demonstrate the effectiveness of our approach through examples that include avatars interacting with each other and with the user.
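The idea of precomputing a control policy with dynamic programming can be illustrated with a minimal value-iteration sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the four clip names, the transition table `NEXT_CLIPS`, the eight-sector discretization of the target direction, the reward function, and the discount factor `GAMMA`. The paper's actual state space and reward are not described on this page.

```python
# Toy value iteration over (current clip, target sector) states.
# All clips, transitions, rewards and constants are hypothetical.

N_SECTORS = 8   # target direction relative to the avatar, discretized (assumed)
GAMMA = 0.9     # discount factor (assumed)

# Motion graph: which clips may follow each clip.
NEXT_CLIPS = {
    "idle": ["idle", "turn_left", "turn_right", "punch"],
    "turn_left": ["idle", "turn_left", "punch"],
    "turn_right": ["idle", "turn_right", "punch"],
    "punch": ["idle"],
}

# How playing a clip shifts the target's sector relative to the avatar.
SECTOR_SHIFT = {"idle": 0, "turn_left": -1, "turn_right": 1, "punch": 0}


def reward(clip, sector):
    """A punch only pays off when the target is straight ahead (sector 0)."""
    return 1.0 if clip == "punch" and sector == 0 else 0.0


def precompute_policy(iterations=100):
    """Run value iteration offline and return a (clip, sector) -> next clip table."""
    states = [(clip, s) for clip in NEXT_CLIPS for s in range(N_SECTORS)]
    value = dict.fromkeys(states, 0.0)
    policy = {}
    for _ in range(iterations):
        updated = {}
        for clip, sector in states:
            best_clip, best_score = None, float("-inf")
            for nxt in NEXT_CLIPS[clip]:
                after = (sector + SECTOR_SHIFT[nxt]) % N_SECTORS
                score = reward(nxt, sector) + GAMMA * value[(nxt, after)]
                if score > best_score:
                    best_clip, best_score = nxt, score
            updated[(clip, sector)] = best_score
            policy[(clip, sector)] = best_clip
        value = updated
    return policy


def act(policy, clip, sector):
    """Runtime control: a single table lookup, with no search over motions."""
    return policy[(clip, sector)]


if __name__ == "__main__":
    table = precompute_policy()
    print(act(table, "idle", 1))
```

In this sketch the expensive search happens once in `precompute_policy`, and the per-frame cost at runtime is a dictionary lookup, which is the property that makes controlling many avatars at once inexpensive.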

Download

Paper (PDF; 5.2 MB)

Video (MOV; QuickTime MPEG4, 17 MB)

Publications

Jehee Lee and Kang Hoon Lee, Precomputing Avatar Behavior from Human Motion Data, Symposium on Computer Animation 2004, 79-87, August 2004.

Jehee Lee and Kang Hoon Lee, Precomputing Avatar Behavior from Human Motion Data, Graphical Models, volume 68, issue 2, 158-174, March 2006. (See the online version)

Images

A boxer

The animated boxer tracks and punches the standing target. The user can manipulate the target object interactively by dragging the mouse.

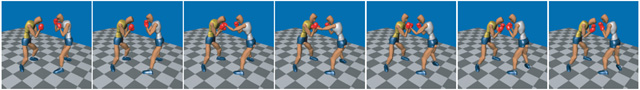

Two boxers

Two animated boxers spar with each other. Both are driven by the same motion data and control policies, and each treats the opponent's head as its target.

Many boxers

To evaluate the performance of our system, we created 30 animated boxers sparring. The system required about 251 seconds to create 1000 frames of video images, and rendering dominated that computation time. With video and sound disabled, the system required only 9 seconds to create the same animation. In other words, the motion of 30 avatars is computed and controlled at a rate of more than 100 frames per second.
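The frame rates implied by these timings can be worked out directly. The figures below are rounded and use only the numbers quoted above; the per-avatar rate in the last line is a derived quantity, not one reported on this page.

```latex
\begin{align*}
\text{with rendering:} \quad & \frac{1000\ \text{frames}}{251\ \text{s}} \approx 4.0\ \text{frames/s} \\
\text{without video and sound:} \quad & \frac{1000\ \text{frames}}{9\ \text{s}} \approx 111\ \text{frames/s} \\
\text{avatar updates:} \quad & 30 \times 111\ \text{frames/s} \approx 3300\ \text{avatar-frames/s}
\end{align*}
```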

This page is maintained by Kang Hoon Lee and was last updated on May 15, 2006.