
Controllable Data Sampling in the Space of Human Poses


Kyungyong Yang¹, Kibeom Youn¹, Kyungho Lee¹, Jehee Lee¹

¹ Seoul National University


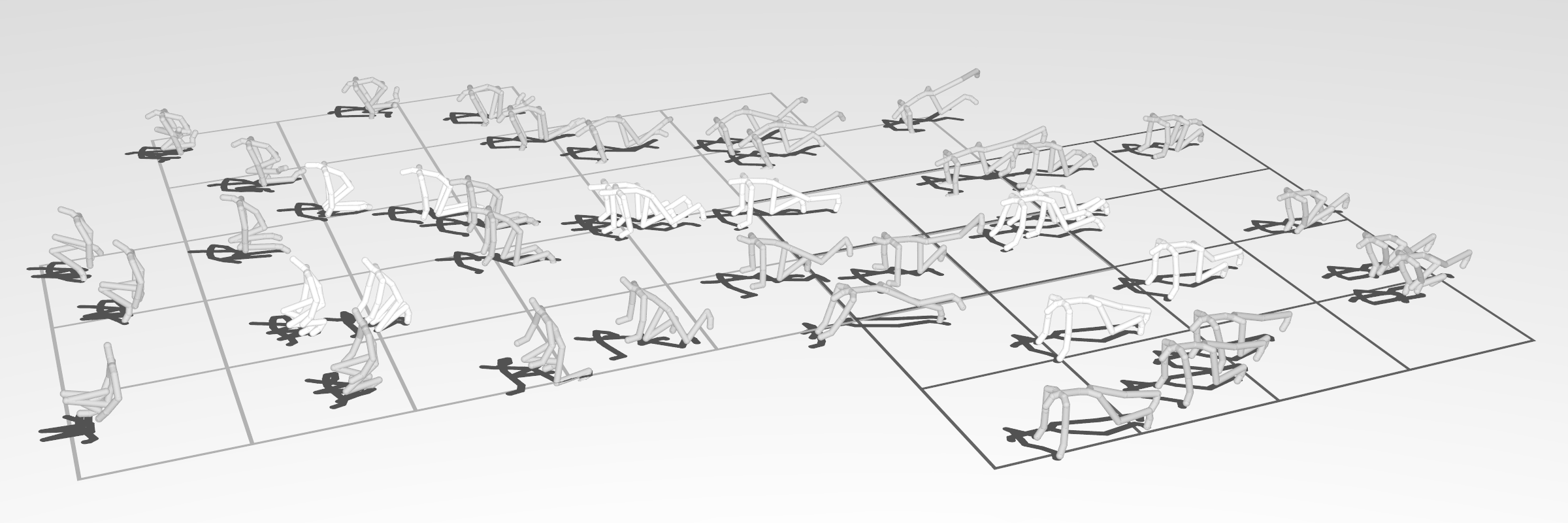

Sampling Results in the Space of Human Poses


Abstract

Markerless human pose recognition using a single depth camera plays an important role in interactive graphics applications and user interface design. Recent pose recognition algorithms have adopted machine learning techniques that utilize a large collection of motion capture data. The effectiveness of these algorithms is greatly influenced by the diversity and variability of the training data. We present a new sampling method that resamples a collection of human motion data to improve pose variability and achieve an arbitrary size and level of density in the space of human poses. The space of human poses is high-dimensional, so brute-force uniform sampling is intractable. We exploit dimensionality reduction and locally stratified sampling to generate either uniform distributions or distributions biased toward a specific application in the space of human poses. Our algorithm learns to recognize challenging poses such as sitting, kneeling, stretching, and yoga using a remarkably small amount of training data. The recognition algorithm can also be steered to maximize its performance for a specific domain of human poses. We demonstrate that our algorithm performs much better than the Kinect SDK at recognizing challenging acrobatic poses, while performing comparably on easy upright standing poses.
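The abstract describes resampling motion data by combining dimensionality reduction with locally stratified sampling so that the result is either uniform or deliberately biased. This page does not specify the reduction or the stratification scheme, so the sketch below assumes PCA for the reduction and a regular grid over the reduced coordinates as the strata; `pca_project`, `stratified_resample`, and the `weight_fn` bias hook are hypothetical names, not the authors' code. Each output sample first picks an occupied cell (uniformly, or in proportion to `weight_fn`) and then a pose inside it, so sparsely populated regions of pose space are drawn as often as densely populated ones.

```python
import numpy as np


def pca_project(poses, k):
    """Project pose vectors of shape (n, d) onto their top-k principal components."""
    centered = poses - poses.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:k].T


def stratified_resample(poses, n_samples, k=2, cells_per_axis=8, weight_fn=None, seed=0):
    """Return indices into `poses` drawn evenly (or with a bias) over reduced pose space.

    Assumption: PCA plus a regular grid stand in for the paper's dimensionality
    reduction and locally stratified sampling. `weight_fn` (hypothetical) must
    return a non-negative weight for a cell centre, with at least one positive.
    """
    rng = np.random.default_rng(seed)
    z = pca_project(poses, k)
    lo, hi = z.min(axis=0), z.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero on flat axes
    # Assign each reduced pose to an integer grid cell (one stratum per cell).
    cell_idx = np.floor((z - lo) / span * cells_per_axis).astype(int)
    cell_idx = np.clip(cell_idx, 0, cells_per_axis - 1)
    strata = {}
    for i, key in enumerate(map(tuple, cell_idx)):
        strata.setdefault(key, []).append(i)
    keys = list(strata)
    # Uniform weight per occupied cell by default; otherwise bias by cell centre.
    if weight_fn is None:
        weights = np.ones(len(keys))
    else:
        centres = lo + (np.array(keys) + 0.5) / cells_per_axis * span
        weights = np.array([weight_fn(c) for c in centres], dtype=float)
    weights = weights / weights.sum()
    # Pick a cell for every output sample, then a random pose inside that cell.
    chosen = rng.choice(len(keys), size=n_samples, p=weights)
    return np.array([rng.choice(strata[keys[c]]) for c in chosen])


# Synthetic example: many near-identical "standing" poses plus a few diverse ones.
data_rng = np.random.default_rng(1)
common = data_rng.normal(0.0, 0.1, size=(950, 30))
rare = data_rng.normal(0.0, 1.0, size=(50, 30))
poses = np.vstack([common, rare])
picks = stratified_resample(poses, n_samples=200)
print("rare fraction in input:", 50 / len(poses))
print("rare fraction in resample:", np.mean(picks >= 950))
```

Here `n_samples` sets the output size independently of the input size, and `cells_per_axis` controls how finely the reduced space is stratified. Because poses are drawn with replacement from the input, this sketch can repeat poses; it only reweights existing data and does not synthesize new ones.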


Publication

Kyungyong Yang, Kibeom Youn, Kyungho Lee, and Jehee Lee, "Controllable Data Sampling in the Space of Human Poses," Computer Animation and Virtual Worlds 26, no. 3-4 (2015): 457-467.

Download

Paper (Author’s Manuscript) (1.7 MB)


Demo Video

Download Video (103 MB)

Download Supplemental Material (3.87 MB)
