Understanding the Stability of Deep Control Policies for Biped Locomotion

Hwangpil Park1

Ri Yu1

Yoonsang Lee2

Kyungho Lee3

Jehee Lee1

1 Seoul National University

2 Hanyang University

3 NC Soft

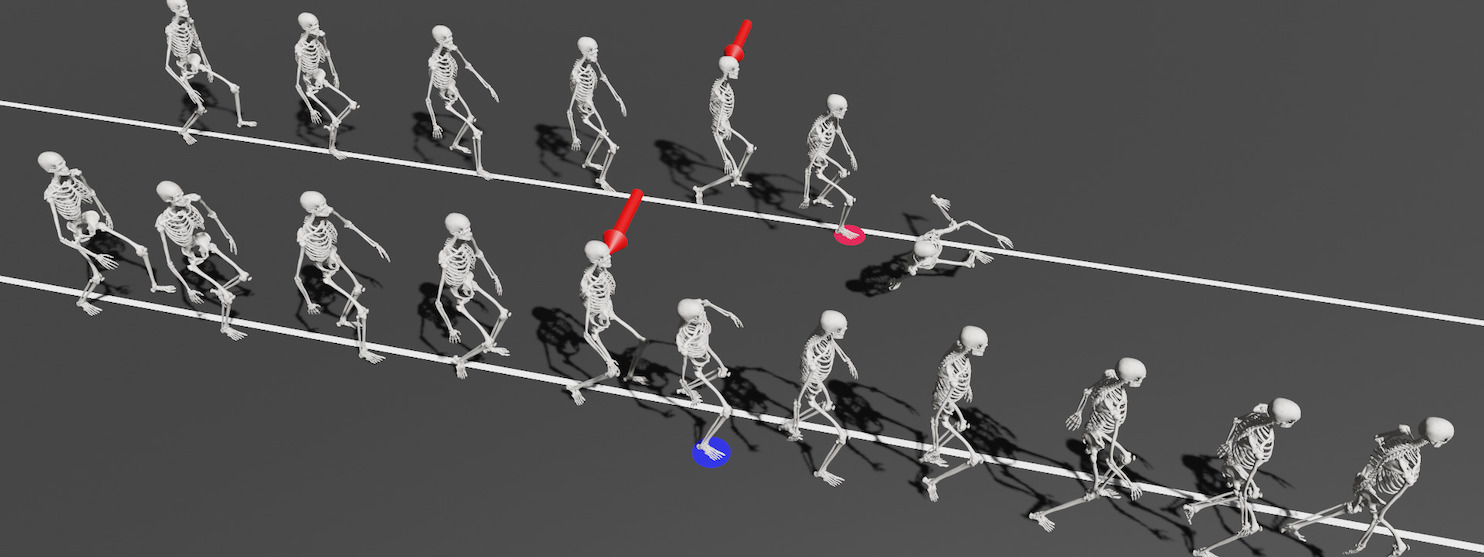

Achieving stability and robustness is the primary goal of biped locomotion control. Recently, deep reinforce learning (DRL) has attracted great attention as a general methodology for constructing biped control policies and demonstrated significant improvements over the previous state-of-the-art. Although deep control policies have advantages over previous controller design approaches, many questions remain unanswered. Are deep control policies as robust as human walking? Does simulated walking use similar strategies as human walking to maintain balance? Does a particular gait pattern similarly affect human and simulated walking? What do deep policies learn to achieve improved gait stability? The goal of this study is to answer these questions by evaluating the push-recovery stability of deep policies compared to human subjects and a previous feedback controller. We also conducted experiments to evaluate the effectiveness of variants of DRL algorithms.

Hwangpil Park, Ri Yu, Yoonsang Lee, Kyungho Lee and Jehee Lee.

Understanding the Stability of Deep Control Policies for Biped Locomotion.

The Visual Computer (2022).

Publisher Link

Hwangpil Park, Ri Yu, Yoonsang Lee, Kyungho Lee and Jehee Lee.

Understanding the Stability of Deep Control Policies for Biped Locomotion.

arXiv:2007.15242 (2020).

PDF (4.3M)

Simulation Code: Github This code is based on MASS(Muscle-Actuated Skeletal System) code.

Simulation Data: Download Zip (34.4 MB)

Human and Data-driven Controller Data: Link