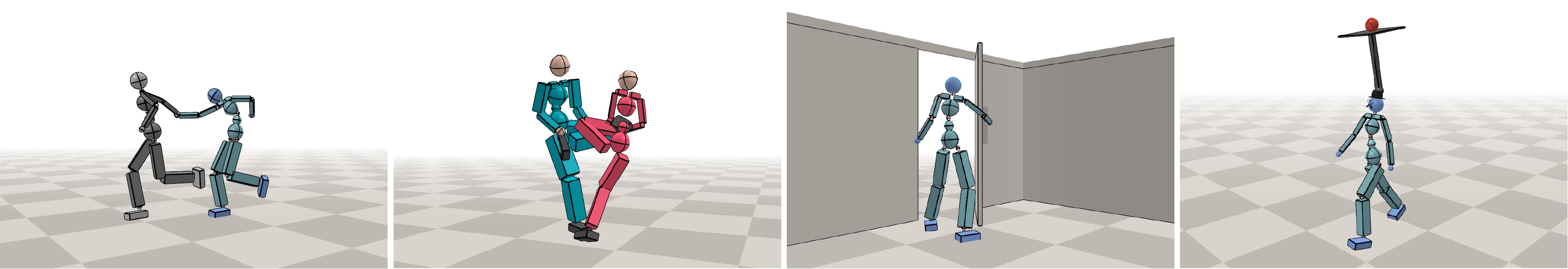

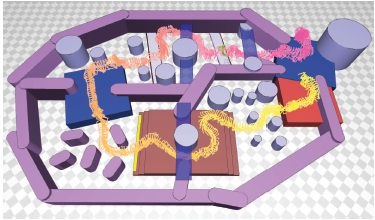

Learning Virtual Chimeras by Dynamic Motion Reassembly, SIGGRAPH Asia 2022. Link

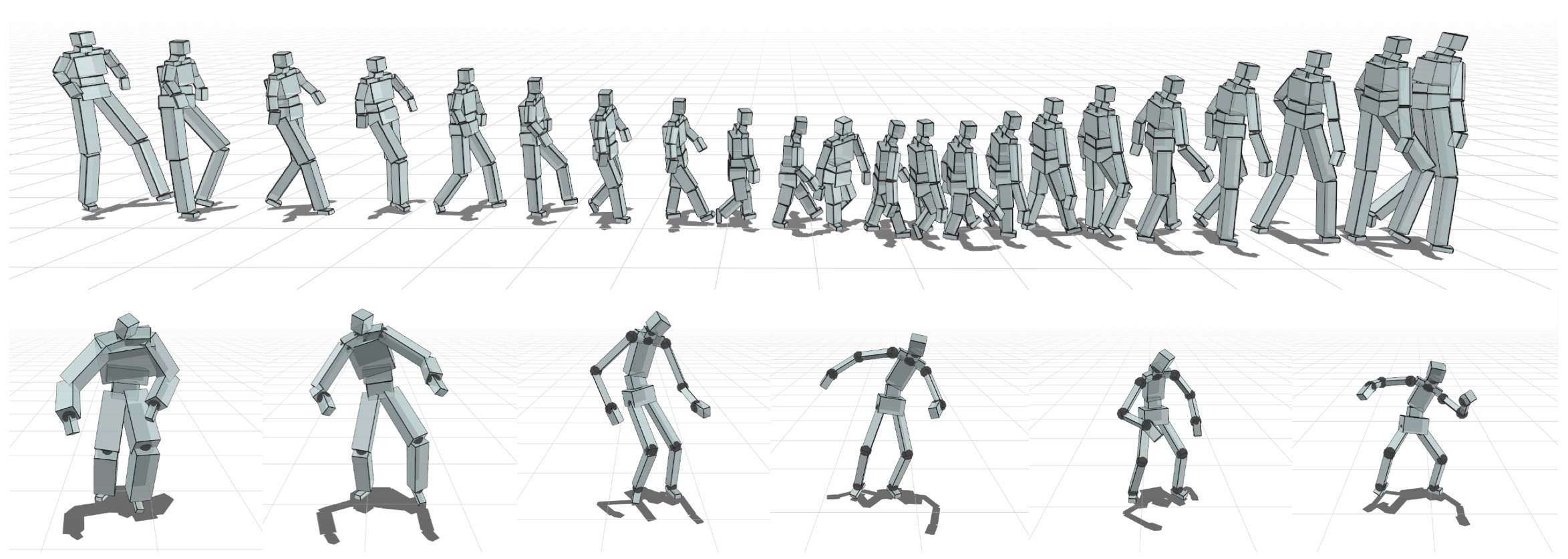

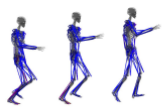

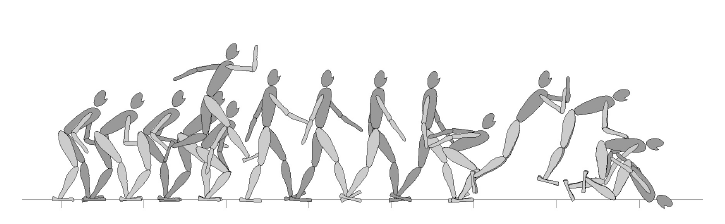

Generative GaitNet, SIGGRAPH 2022. Link

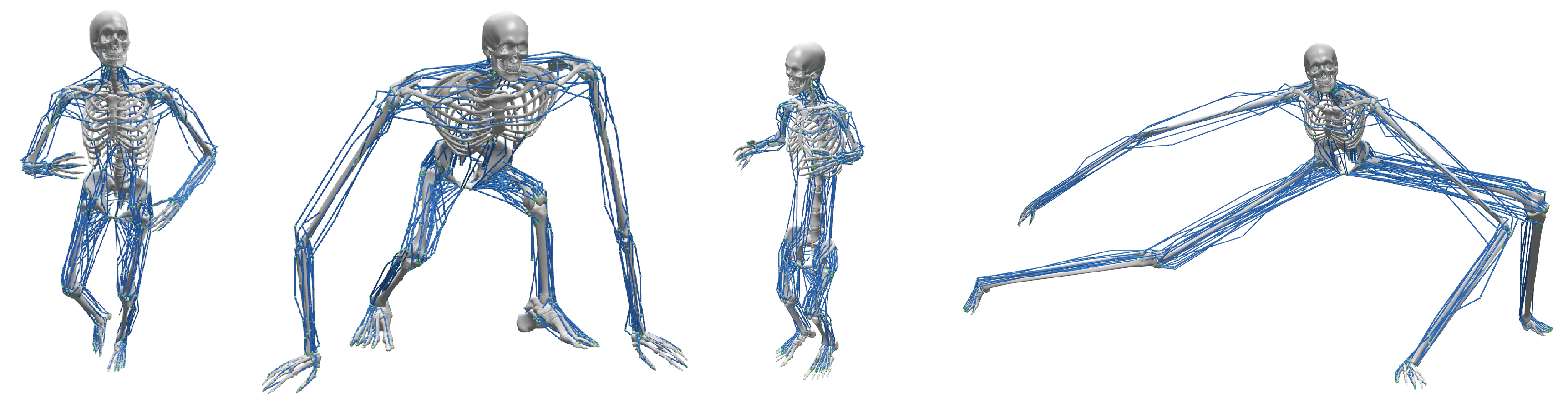

Deep Compliant Control, SIGGRAPH 2022. Link

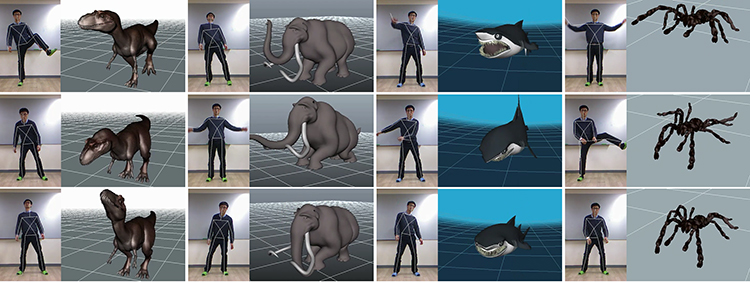

Human Motion Control of Quadrupedal Robots using Deep Reinforcement Learning, RSS 2022. Link

Human Dynamics from Monocular Video with Dynamic Camera Movements, SIGGRAPH Asia 2021. Link

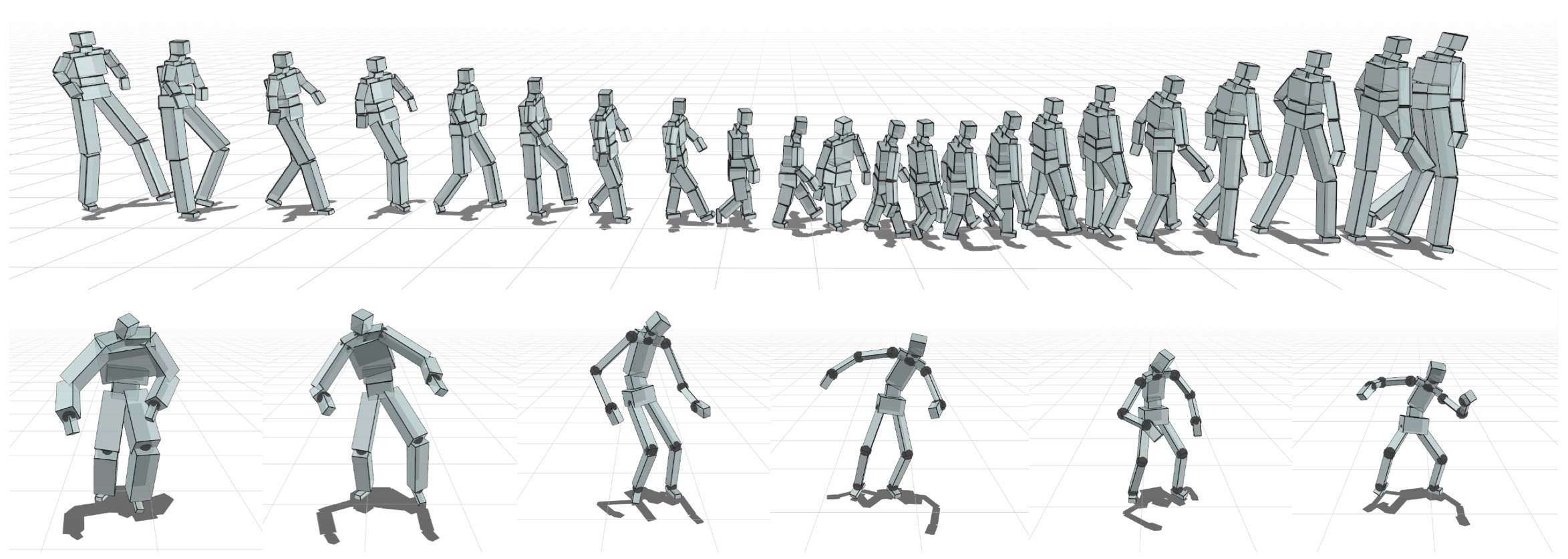

Learning a family of motor skills from a single motion clip, SIGGRAPH 2021. Link

Learning Time-Critical Responses for Interactive Character Control, SIGGRAPH 2021. Link

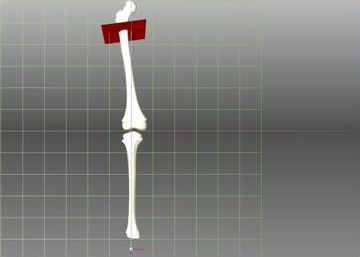

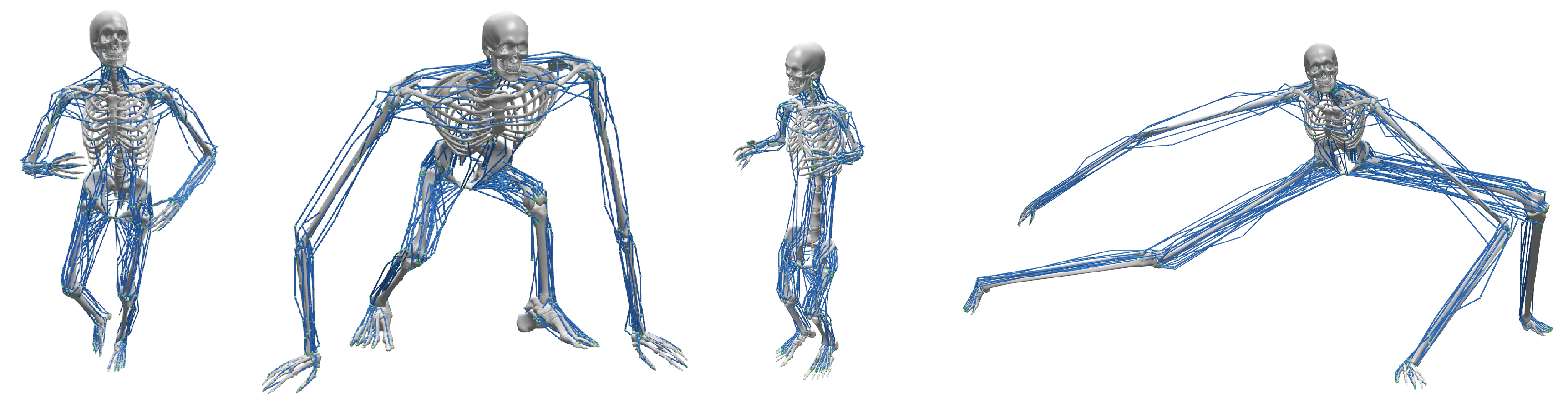

Functionality-Driven Musculature Retargeting, Computer Graphics Forum 2020. Link

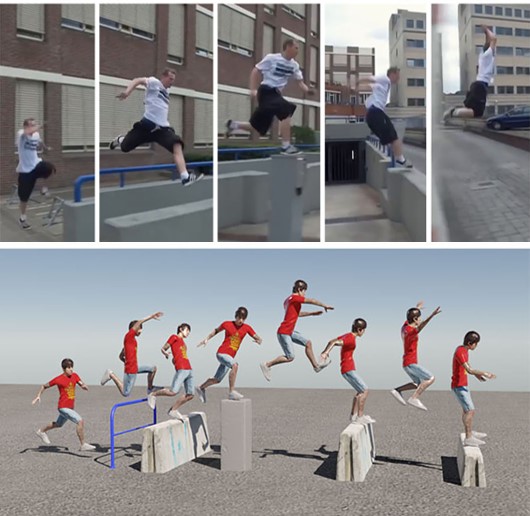

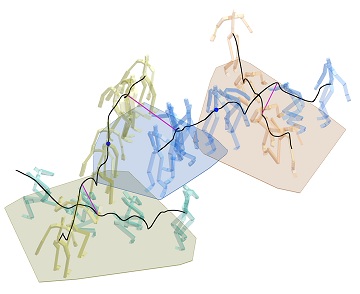

Learning Predict-and-Simulate Policies From Unorganized Human Motion Data, SIGGRAPH Asia 2019. Link

Learning Body Shape Variation in Physics-based Characters, SIGGRAPH Asia 2019. Link

SoftCon: Simulation and Control of Soft-Bodied Animals with Biomimetic Actuators, SIGGRAPH Asia 2019. Link

Figure Skating Simulation from Video, Pacific Graphics 2019. Link

Scalable Muscle-Actuated Human Simulation and Control, SIGGRAPH 2019. Link

Aerobatics Control of Flying Creatures via Self-Regulated Learning, SIGGRAPH Asia 2018. Link

Interactive Character Animation by Learning Multi-Objective Control, SIGGRAPH Asia 2018. Link

Dexterous Manipulation and Control with Volumetric Muscles, SIGGRAPH 2018. Link

How to Train Your Dragon, SIGGRAPH Asia 2017. Link

Soft Shadow Art, Symposium on Computational Aesthetics 2017. Link

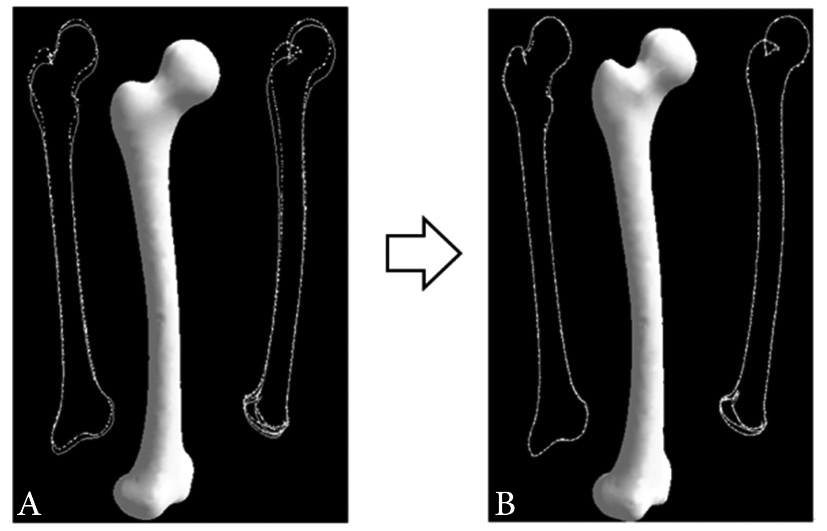

3D Bone Shape Reconstruction from Uncalibrated Radiographs, Medical Engineering & Physics 2017. Link

Shadow Theatre, SIGGRAPH 2016. Link

Motion Grammars for Character Animation, Eurographics 2016. Link

Push-Recovery Stability of Biped Locomotion, SIGGRAPH Asia 2015. Link

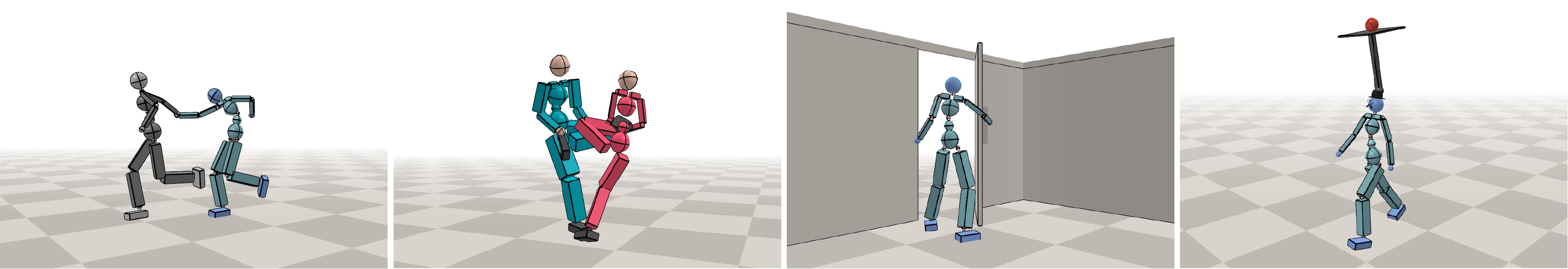

Generate and Ranking Diverse Multi-Character Interactions, SIGGRAPH Asia 2014. Link

Locomotion Control for Many-Muscle Humanoids, SIGGRAPH Asia 2014. Link

Interactive Manipulation of Large-Scale Crowd Animation, SIGGRAPH 2014. Link

Data-driven Control of Flapping Flight, ACM Transactions on Graphics, 2013. Link

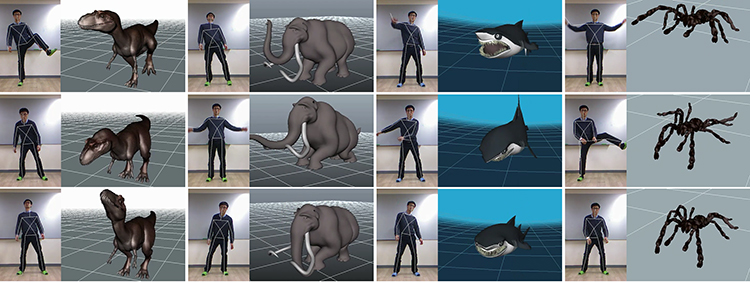

Creature Features: Online Motion Puppetry for Nonhuman Characters, SCA 2013. Link

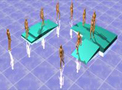

Tiling Motion Patches: Simulating Multiple Character Interaction, SCA 2012 and TVCG 2013. Link

Retrieval and Visualization of Human Motion Data via Stick Figures, Pacific Graphics 2012. Link

Deformable Motion: Squeezing into Cluttered Environments, Eurographics 2011. Link

Morphable Crowds: Interpolating Navigation Styles, SIGGRAPH Asia 2010. Link

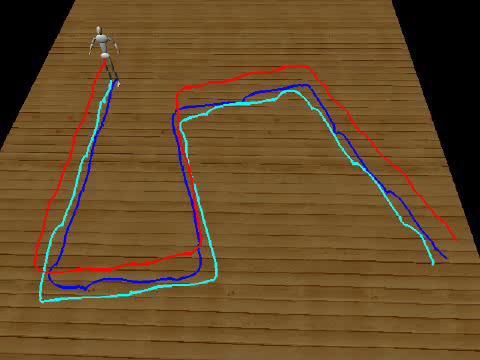

Data-Driven Biped Control, SIGGRAPH 2010. Link

Editing Dynamic Human Motions via Momentum and Force, SCA 2010. Link

Linkless Octree Using Multi-Level Perfect Hashing, Pacific Graphics 2009. Link

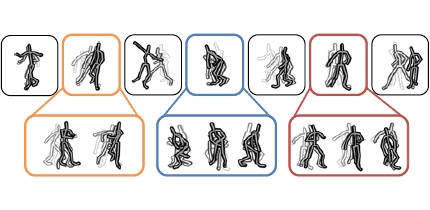

Synchronized Multi-Character Motion Editing, SIGGRAPH 2009. Link

Group Motion Editing, SIGGRAPH 2008. Link

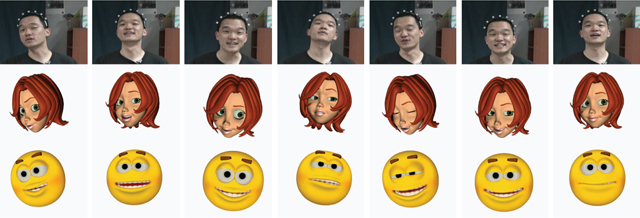

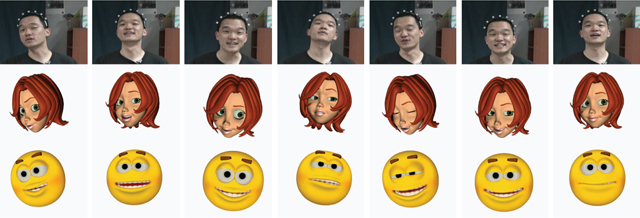

Expressive Facial Gestures from Motion Capture Data, Eurographics 2008. Link

Representing Rotations and Orientations in Geometric Computing, IEEE CG&A 2008. Link

Group Behavior From Video (Data-Driven Crowd Simulation), SCA 2007. Link

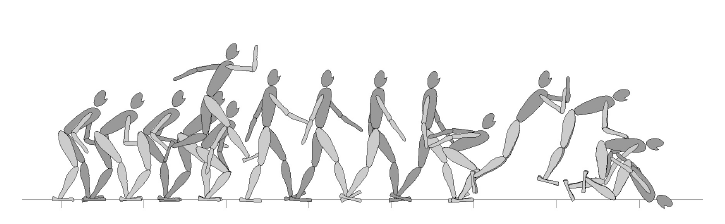

Simulating Biped Behavior from Human Motion Data, SIGGRAPH 2007. Link

Motion Patches: Building Blocks for Virtual Environments Annotated with Motion Data, SIGGRAPH 2006. Link

Low-Dimensional Motion Space, CASA 2006. Link

Reinforcement Learning with Motion Graphs, SCA 2004 and Graphical Models 2006. Link

Planning Biped Locomotion Using Motion Capture Data and Probabilistic Roadmaps, ACM ToG 2003. Link

Interactive Control of Avatars Animated with Human Motion Data (a.k.a. Motion Graphs), SIGGRAPH 2002. Link

General Construction of Time-Domain Filters for Orientation Data, IEEE TVCG 2002. Link

Computer Puppetry: An Importance-based Approach, ACM ToG 2001. Link

A Coordinate-Invariant Approach to Multiresolution Motion Analysis, Graphical Models 2001. Link

Hierarchical Motion Editing, SIGGRAPH 1999. Link

Two Minute Papers (May 27, 2022) This AI makes you a virtual stuntman! link

SCIENCE to the Future (May 13, 2022) Creating new enjoyment through the interaction of reality and imagination link

Two Minute Papers (Sep 30, 2021) This AI stuntman just keeps getting better! link

Two Minute Papers (Oct 7, 2020) The AI Can Deal With Body Shape Variation! link

동아일보 (Nov 13, 2019) World-first reproduction of human muscle movement using AI link

Two Minute Papers (Oct 19, 2019) AI Learns Human Movement From Unorganized Data link

Elements S4E82 (Aug 22, 2019) Why This Virtual Human Is Being Injured by Scientists link

80.lv (June 26, 2019) Next-Gen Simulation of Human Character Movement link

Two Minute Papers (July 16, 2019) Virtual Characters Learn To Work Out And Undergo Surgery link

Two Minute Papers (May 4, 2019) How To Train Your Virtual Dragon link

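메디칼옵져버 (May 25, 2015) Receives the Basic Research Paper Award from the Pediatric Orthopaedic Society of North America link

한국스포츠경제 (May 25, 2015) Receives the Basic Research Paper Award from the Pediatric Orthopaedic Society of North America link

과학동아 (Nov 27, 2017) This is how the imaginary dragon flew... An AI that teaches itself to fly has arrived link

연합뉴스 (Nov 27, 2017) 3D reproduction of the flight of "imaginary creatures" from comics and games link

로봇신문 (Nov 27, 2017) Automatic control technology for virtual flying creatures developed using AI link

KBS (Jan 1, 2017) 다큐멘터리3일 (Documentary 3 Days) [Episode 482] Passion Opens the Future: 72 Hours with Seoul National University's Advanced Science and Technology Research Teams link

과학동아 (Dec 29, 2016) Seven Future Technologies from the Seoul National University College of Engineering link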

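MBC News (Aug 1, 2016) Computer-made "shadow theatre" recreated with 3D technology link

YTN Science (Aug 1, 2016) "Shadow theatre," the art of light, made by computers instead of actors link

연합뉴스 (Aug 1, 2016) Technology developed to find "hidden movements" in shadow theatre link

서울경제 (Aug 1, 2016) Human body movements realized with computer algorithms link

디지털타임스 (Aug 1, 2016) Computers create "shadow theatre" movements link

뉴스원 (Aug 1, 2016) Creative shadow theatre movements developed through computer algorithms link

아주경제 (Aug 1, 2016) Korean research team develops shadow theatre movements through computer algorithms link

아시아경제 (Aug 1, 2016) Computers... jump into shadow theatre link

이데일리 (Aug 1, 2016) Korean researchers develop "shadow theatre" movements through computer algorithms link

아이뉴스24 (Aug 1, 2016) Shadow theatre movements developed using computer algorithms link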

KBS News (Dec 15, 2014) Many-Muscle Humanoids, AVI

동아일보 (Dec 5, 2014) Previsualization, PDF

동아사이언스 (Dec, 2014) 공대가 좋아2 (I Like Engineering 2), Episode 10, Link

과학동아 (Dec, 2014) Making birds that fly through the sky with a 3D printer, PDF

수학동아 (Oct, 2014) Reproducing the movement of living creatures, PDF

MBC News (Oct 24, 2013) Bird flight simulation, AVI

YTN 사이언스투데이 (Oct 24, 2013) Bird flight simulation, Link